The most requested feature is finally implemented: tab completion and command hinting. Enjoy!

Added

- Compatibility with more vendors:

• Turtle Beach (0x10f5)

• Hyperkin (0x2e24)

• Nacon (0x3285)

• BDA (0x20d6)

• 8BitDo (0x2dc8)

- Share button input for Xbox Series X|S gamepads

- USB remote wakeup support (for gamepads)

- Guide button LED mode control via sysfs

- Fully featured driver for the wireless dongle 🎉

Fixed

- Build on newer kernels (5.15+)

- Sporadic headset malfunction due to ENOSPC

tl;dr: A vandal deleted NewsBlur’s MongoDB database during a migration. No data was stolen or lost.

I’m in the process of moving everything on NewsBlur over to Docker containers in prep for a big redesign launching next week. It’s been a great year of maintenance and I’ve enjoyed the fruits of Ansible + Docker for NewsBlur’s 5 database servers (PostgreSQL, MongoDB, Redis, Elasticsearch, and soon ML models). The day was wrapping up and I settled into a new book on how to tame the machines once they’re smarter than us when I received a strange NewsBlur error on my phone.

"query killed during yield: renamed collection 'newsblur.feed_icons' to 'newsblur.system.drop.1624498448i220t-1.feed_icons'"

There is honestly no set of words in that error message that I ever want to see again. What is drop doing in that error message? Better go find out.

Logging into the MongoDB machine to check what state the DB is in, I come across the following…

nbset:PRIMARY> show dbs

READ__ME_TO_RECOVER_YOUR_DATA 0.000GB

newsblur 0.718GB

nbset:PRIMARY> use READ__ME_TO_RECOVER_YOUR_DATA

switched to db READ__ME_TO_RECOVER_YOUR_DATA

nbset:PRIMARY> db.README.find()

{

"_id" : ObjectId("60d3e112ac48d82047aab95d"),

"content" : "All your data is a backed up. You must pay 0.03 BTC to XXXXXXFTHISGUYXXXXXXX 48 hours for recover it. After 48 hours expiration we will leaked and exposed all your data. In case of refusal to pay, we will contact the General Data Protection Regulation, GDPR and notify them that you store user data in an open form and is not safe. Under the rules of the law, you face a heavy fine or arrest and your base dump will be dropped from our server! You can buy bitcoin here, does not take much time to buy https://localbitcoins.com or https://buy.moonpay.io/ After paying write to me in the mail with your DB IP: FTHISGUY@recoverme.one and you will receive a link to download your database dump."

}

Two thoughts immediately occurred:

- Thank goodness I have some recently checked backups on hand

- No way they have that data without me noticing

Three and a half hours before this happened, I switched the MongoDB cluster over to the new servers. When I did that, I shut down the original primary in order to delete it in a few days when all was well. And thank goodness I did that as it came in handy a few hours later. Knowing this, I realized that the hacker could not have taken all that data in so little time.

With that in mind, I’d like to answer a few questions about what happened here.

- Was any data leaked during the hack? How do you know?

- How did NewsBlur’s MongoDB server get hacked?

- What will happen to ensure this doesn’t happen again?

Let’s start by talking about the most important question of all which is what happened to your data.

1. Was any data leaked during the hack? How do you know?

I can definitively write that no data was leaked during the hack. I know this because of two different sets of logs showing that the automated attacker only issued deletion commands and did not transfer any data off of the MongoDB server.

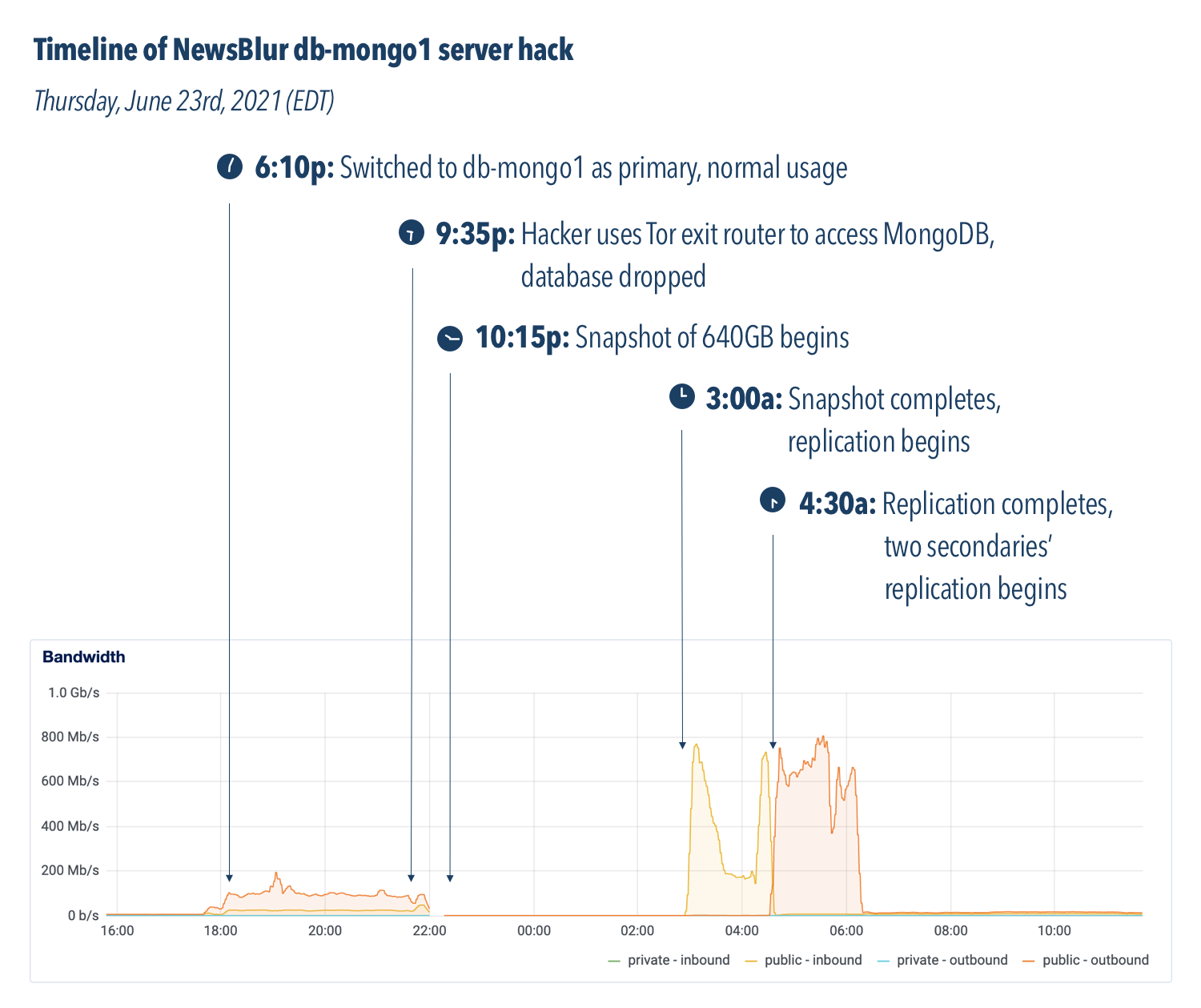

Below is a snapshot of the bandwidth of the db-mongo1 machine over 24 hours:

You can imagine the stress I experienced in the forty minutes between 9:35p, when the hack began, and 10:15p, when the fresh backup snapshot was identified and put into gear. Let’s break down each moment:

- 6:10p: The new db-mongo1 server was put into rotation as the MongoDB primary server. This machine was the first of the new, soon-to-be private cloud.

- 9:35p: Three hours later an automated hacking attempt opened a connection to the db-mongo1 server and immediately dropped the database. Downtime ensued.

- 10:15p: Before the former primary server could be placed into rotation, a snapshot of the server was made to ensure the backup would not delete itself upon reconnection. This cost a few hours of downtime, but saved nearly 18 hours of a day’s data by not forcing me to go into the daily backup archive.

- 3:00a: Snapshot completes, replication from original primary server to new db-mongo1 begins. What you see in the next hour and a half is what the transfer of the DB looks like in terms of bandwidth.

- 4:30a: Replication, which is inbound from the old primary server, completes, and now replication begins outbound on the new secondaries. NewsBlur is now back up.

The most important bit of information the above chart shows us is what a full database transfer looks like in terms of bandwidth. From 6p to 9:30p, the amount of data was the expected amount from a working primary server with multiple secondaries syncing to it. At 3a, you’ll see an enormous amount of data transferred.

This tells us that the hacker was an automated digital vandal rather than a concerted hacking attempt. And if we were to pay the ransom, it wouldn’t do anything because the vandals don’t have the data and have nothing to release.
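To put a number on what a full transfer looks like, here is a rough back-of-the-envelope sketch. It assumes the "about 250GB" figure quoted in this post is the size of the data that was replicated, and that the replication took the full hour and a half between 3:00a and 4:30a. Both inputs are approximations, so the result is an order-of-magnitude estimate only.

```python
# Rough arithmetic only; the inputs are approximations taken from the post.
DATA_BYTES = 250 * 10**9      # "about 250GB", counted in decimal gigabytes
TRANSFER_SECONDS = 90 * 60    # replication window, 3:00a to 4:30a


def average_rate(num_bytes, seconds):
    """Return the average rate as (megabytes per second, megabits per second)."""
    bytes_per_second = num_bytes / seconds
    return bytes_per_second / 10**6, bytes_per_second * 8 / 10**6


mb_per_s, mbit_per_s = average_rate(DATA_BYTES, TRANSFER_SECONDS)
print(f"{mb_per_s:.1f} MB/s, {mbit_per_s:.0f} Mbit/s")
```

Under those assumptions a full copy needs a sustained rate in the hundreds of megabits per second for over an hour, which is the kind of spike the chart shows at 3a and does not show around 9:35p.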

We can also reason that the vandal was not able to access any files that were on the server outside of MongoDB due to using a recent version of MongoDB in a Docker container. Unless the attacker had access to a 0-day to both MongoDB and Docker, it is highly unlikely they were able to break out of the MongoDB server connection.

While the server was being snapshot, I used that time to figure out how the hacker got in.

2. How did NewsBlur’s MongoDB server get hacked?

Turns out the ufw firewall I enabled and diligently kept on a strict allowlist with only my internal servers didn’t work on a new server because of Docker. When I containerized MongoDB, Docker helpfully inserted an allow rule into iptables, opening up MongoDB to the world. So while my firewall was “active”, doing a sudo iptables -L | grep 27017 showed that MongoDB was open to the world. This has been a Docker footgun since 2014.
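One way to catch this class of problem is to test the port from the outside instead of trusting the firewall's own status output. The following is a minimal sketch of such a probe, not the tooling used during the incident. The address in the usage comment is a documentation placeholder, and the probe only tells you something when it runs on a machine that is not on the allowlist.

```python
import socket


def port_is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Usage, from a machine OUTSIDE the allowlist (placeholder address):
#   port_is_reachable("203.0.113.10", 27017)
# A True result means the database port is exposed regardless of what ufw reports.
```

Running a check like this right after a deploy would turn a silent firewall bypass into a visible failure.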

To be honest, I’m a bit surprised it took over 3 hours from when I flipped the switch to when a hacker/vandal dropped NewsBlur’s MongoDB collections and pretended to ransom about 250GB of data. This is the work of an automated hack and one that I was prepared for. NewsBlur was back online a few hours later once the backups were restored and the Docker-made hole was patched.

It would make for a much more dramatic read if I was hit through a vulnerability in Docker instead of a footgun. By having Docker silently override the firewall, Docker has made it easier for developers who want to open up ports on their containers at the expense of security. Better would be for Docker to issue a warning when it detects that the most popular firewall on Linux is active and filtering traffic to a port that Docker is about to open.

The second reason we know that no data was taken comes from looking through the MongoDB access logs. With these rich and verbose logging sources we can invoke a pretty neat command to find everybody who is not one of the 100 known NewsBlur machines that has accessed MongoDB.

$ cat /var/log/mongodb/mongod.log | egrep -v "159.65.XX.XX|161.89.XX.XX|<< SNIP: A hundred more servers >>"

2021-06-24T01:33:45.531+0000 I NETWORK [listener] connection accepted from 171.25.193.78:26003 #63455699 (1189 connections now open)

2021-06-24T01:33:45.635+0000 I NETWORK [conn63455699] received client metadata from 171.25.193.78:26003 conn63455699: { driver: { name: "PyMongo", version: "3.11.4" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.4.0-74-generic" }, platform: "CPython 3.8.5.final.0" }

2021-06-24T01:33:46.010+0000 I NETWORK [listener] connection accepted from 171.25.193.78:26557 #63455724 (1189 connections now open)

2021-06-24T01:33:46.092+0000 I NETWORK [conn63455724] received client metadata from 171.25.193.78:26557 conn63455724: { driver: { name: "PyMongo", version: "3.11.4" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.4.0-74-generic" }, platform: "CPython 3.8.5.final.0" }

2021-06-24T01:33:46.500+0000 I NETWORK [conn63455724] end connection 171.25.193.78:26557 (1198 connections now open)

2021-06-24T01:33:46.533+0000 I NETWORK [conn63455699] end connection 171.25.193.78:26003 (1200 connections now open)

2021-06-24T01:34:06.533+0000 I NETWORK [listener] connection accepted from 185.220.101.6:10056 #63456621 (1266 connections now open)

2021-06-24T01:34:06.627+0000 I NETWORK [conn63456621] received client metadata from 185.220.101.6:10056 conn63456621: { driver: { name: "PyMongo", version: "3.11.4" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.4.0-74-generic" }, platform: "CPython 3.8.5.final.0" }

2021-06-24T01:34:06.890+0000 I NETWORK [listener] connection accepted from 185.220.101.6:21642 #63456637 (1264 connections now open)

2021-06-24T01:34:06.962+0000 I NETWORK [conn63456637] received client metadata from 185.220.101.6:21642 conn63456637: { driver: { name: "PyMongo", version: "3.11.4" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.4.0-74-generic" }, platform: "CPython 3.8.5.final.0" }

2021-06-24T01:34:08.018+0000 I COMMAND [conn63456637] dropDatabase config - starting

2021-06-24T01:34:08.018+0000 I COMMAND [conn63456637] dropDatabase config - dropping 1 collections

2021-06-24T01:34:08.018+0000 I COMMAND [conn63456637] dropDatabase config - dropping collection: config.transactions

2021-06-24T01:34:08.020+0000 I STORAGE [conn63456637] dropCollection: config.transactions (no UUID) - renaming to drop-pending collection: config.system.drop.1624498448i1t-1.transactions with drop optime { ts: Timestamp(1624498448, 1), t: -1 }

2021-06-24T01:34:08.029+0000 I REPL [replication-14545] Completing collection drop for config.system.drop.1624498448i1t-1.transactions with drop optime { ts: Timestamp(1624498448, 1), t: -1 } (notification optime: { ts: Timestamp(1624498448, 1), t: -1 })

2021-06-24T01:34:08.030+0000 I STORAGE [replication-14545] Finishing collection drop for config.system.drop.1624498448i1t-1.transactions (no UUID).

2021-06-24T01:34:08.030+0000 I COMMAND [conn63456637] dropDatabase config - successfully dropped 1 collections (most recent drop optime: { ts: Timestamp(1624498448, 1), t: -1 }) after 7ms. dropping database

2021-06-24T01:34:08.032+0000 I REPL [replication-14546] Completing collection drop for config.system.drop.1624498448i1t-1.transactions with drop optime { ts: Timestamp(1624498448, 1), t: -1 } (notification optime: { ts: Timestamp(1624498448, 5), t: -1 })

2021-06-24T01:34:08.041+0000 I COMMAND [conn63456637] dropDatabase config - finished

2021-06-24T01:34:08.398+0000 I COMMAND [conn63456637] dropDatabase newsblur - starting

2021-06-24T01:34:08.398+0000 I COMMAND [conn63456637] dropDatabase newsblur - dropping 37 collections

<< SNIP: It goes on for a while... >>

2021-06-24T01:35:18.840+0000 I COMMAND [conn63456637] dropDatabase newsblur - finished

The above is a lot, but the important bit of information to take from it is that by using a subtractive filter, capturing everything that doesn’t match a known IP, I was able to find the two connections that were made a few seconds apart. Both connections from these unknown IPs occurred only moments before the database-wide deletion. By following the connection ID, it became easy to see the hacker come into the server only to delete it seconds later.
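The same subtractive filter, followed by grouping on the connection ID, can be sketched in a few lines of Python. This is an illustration of the technique rather than the command that was actually run. The first sample line and the 10.0.0.5 address are hypothetical stand-ins for a known internal server, while the other two lines are copied from the log excerpt above.

```python
import re
from collections import defaultdict

# Matches "[conn63456637]" as well as "#63456637 (" on "connection accepted" lines.
CONN_ID = re.compile(r"\[conn(\d+)\]|#(\d+) \(")


def unknown_lines(lines, known_ips):
    """Subtractive filter: keep lines that mention none of the known IP strings.

    Plain substring matching, so it is about as loose as the egrep pattern it mirrors.
    """
    return [line for line in lines if not any(ip in line for ip in known_ips)]


def group_by_connection(lines):
    """Map connection ID -> log lines, so one client can be followed end to end."""
    grouped = defaultdict(list)
    for line in lines:
        match = CONN_ID.search(line)
        if match:
            grouped[match.group(1) or match.group(2)].append(line)
    return dict(grouped)


sample = [
    # Hypothetical line from a known internal server.
    "2021-06-24T01:34:06.000+0000 I NETWORK [listener] connection accepted from 10.0.0.5:40000 #63456600 (1265 connections now open)",
    "2021-06-24T01:34:06.890+0000 I NETWORK [listener] connection accepted from 185.220.101.6:21642 #63456637 (1264 connections now open)",
    "2021-06-24T01:34:08.018+0000 I COMMAND [conn63456637] dropDatabase config - starting",
]

suspicious = group_by_connection(unknown_lines(sample, ["10.0.0.5"]))
for conn_id, entries in suspicious.items():
    print(conn_id, len(entries))
```

Lines without a connection ID, such as the replication entries, are dropped by the grouping step, which keeps the view focused on what each client connection did.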

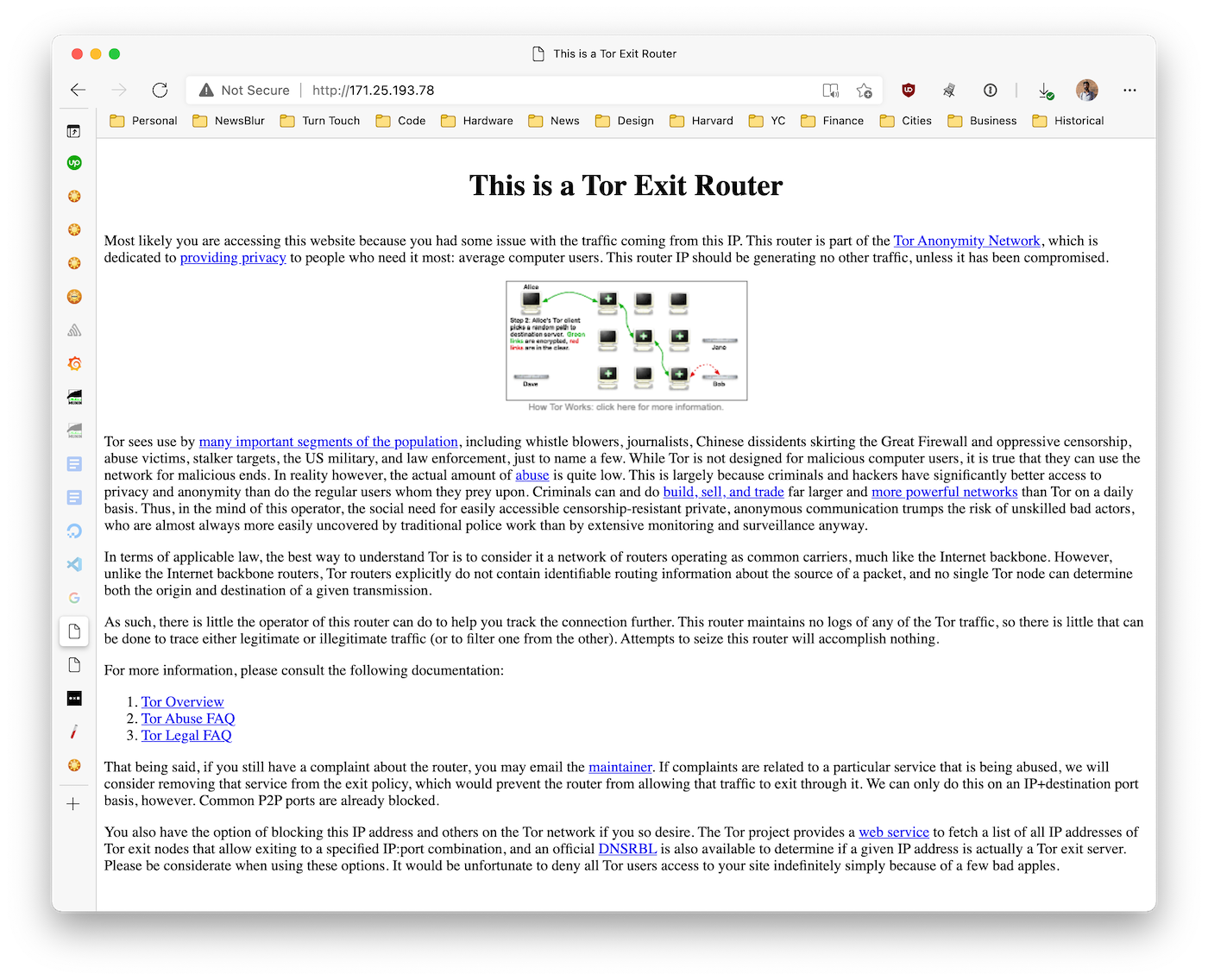

Interestingly, when I visited the IP address of the two connections above, I found a Tor exit router:

This means that it is virtually impossible to track down who is responsible due to the anonymity-preserving quality of Tor exit routers. Tor exit nodes have poor reputations due to the havoc they wreak. Site owners are split on whether to block Tor entirely, but some see the value of allowing anonymous traffic to hit their servers. In NewsBlur’s case, because NewsBlur is a home of free speech, allowing users in countries with censored news outlets to bypass restrictions and get access to the world at large, the continuing risk of supporting anonymous Internet traffic is worth the cost.

3. What will happen to ensure this doesn’t happen again?

Of course, being in support of free speech and providing enhanced ways to access speech comes at a cost. So for NewsBlur to continue serving traffic to all of its worldwide readers, several changes have to be made.

The first change is the one that, ironically, we were in the process of moving to. A VPC, a virtual private cloud, keeps critical servers only accessible from other servers in a private network. But in moving to a private network, I need to migrate all of the data off of the publicly accessible machines. And this was the first step in that process.

The second change is to use database user authentication on all of the databases. We had been relying on the firewall to provide protection against threats, but when the firewall silently failed, we were left exposed. Now who’s to say that this would have been caught if the firewall failed but authentication was in place? I suspect the password needs to be long enough to not be brute-forced, because eventually, knowing that an open but password protected DB is there, it could very possibly end up on a list.

Lastly, a change needs to be made as to which database users have permission to drop the database. Most database users only need read and write privileges. The ideal would be a localhost-only user being allowed to perform potentially destructive actions. If a rogue database user starts deleting stories, it would get noticed a whole lot faster than a database being dropped all at once.
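As an illustration of what such a restricted user could look like, the sketch below builds the two MongoDB command documents involved: a custom role limited to document-level reads and writes, and a user holding only that role with a long random password. The role name, user name, and helper functions are hypothetical, and nothing here is NewsBlur's actual configuration. With PyMongo, each document could be passed to Database.command on an authenticated admin connection.

```python
import secrets


def limited_role_command(db_name, role_name):
    """Build a createRole document that grants document-level read/write only."""
    return {
        "createRole": role_name,
        "privileges": [
            {
                # An empty collection name means every collection in db_name.
                "resource": {"db": db_name, "collection": ""},
                # Deliberately leaves out dropCollection and dropDatabase.
                "actions": ["find", "insert", "update", "remove"],
            }
        ],
        "roles": [],
    }


def app_user_command(db_name, user_name, role_name):
    """Build a createUser document with a long random password; return both."""
    password = secrets.token_urlsafe(32)  # 32 random bytes, URL-safe encoded
    command = {
        "createUser": user_name,
        "pwd": password,
        "roles": [{"role": role_name, "db": db_name}],
    }
    return command, password


# Hypothetical names, for illustration only.
role_cmd = limited_role_command("newsblur", "appReadWriteNoDrop")
user_cmd, generated_password = app_user_command("newsblur", "app", "appReadWriteNoDrop")
```

Keeping the destructive actions on a separate, rarely used account means a leaked application credential can still damage data, but only one document at a time.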

But each of these is only one piece of a defense strategy. As this well-attended Hacker News thread from the day of the hack made clear, a proper defense strategy can never rely on only one well-setup layer. And for NewsBlur that layer was an allowlist-only firewall that worked perfectly up until it didn’t.

As usual the real heroes are backups. Regular, well-tested backups are a necessary component to any web service. And with that, I’ll prepare to launch the big NewsBlur redesign later this week.

JS

> This tells us that the hacker was an automated digital vandal rather than a concerted hacking attempt. And if we were to pay the ransom, it wouldn’t do anything because the vandals don’t have the data and have nothing to release.

Guess they count on users not having enough monitoring to be able to confirm no data was exfil’d

First and foremost, thank you to 3mux's new co-maintainer @PotatoParser. He has significantly improved the code quality within 3mux, and he's to thank for a lot of the stability introduced in this release, enough that we finally feel comfortable tagging an official v1 release.

This is the first official release since v0.3.0, and it includes significant performance, reliability, and usability improvements over the pre-release v1.0.1.

Migration note: the v1.1.0 client can connect to v1.0.1 sessions, but the client freezes upon running 3mux detach. No session data should be lost, but the client terminal will likely have to be restarted. Future releases will aim for better backward compatibility than this.

Build Support:

- 3mux can now be run through Nix flakes (requires Nix 2.4+) (#114)

Performance fixes:

- 3mux uses far fewer resources while idle, and it's also much more responsive (#106)

- 3mux is more efficient in general (#104 #97)

Display fixes:

- htop, nano and kakoune now work significantly better (#107)

- Colors are more reliably handled, fixing issues seen when using bash (#94)

- An issue was fixed where characters were disappearing in readline (#95)

- The interactive session chooser prompt no longer messes up prompt upon Ctrl-C (#72)

- Line wrapping works better now (#77)

- Help page no longer depends upon tab width settings (#109)

- Fixed bug that caused pane divider lines to disappear (#118)

- Properly scroll when wrapping bottom right corner (#116)

Error handling:

- Errors are now more carefully handled to avoid broken states (#82 #100)

- Fuzzing is more extensively used to find bugs (e.g. #92)

Changes:

- Cleaner UI and implementation for sessions (#91)

Jellyfin 10.7.0

Stable release for 10.7.0

GitHub project for release: https://github.com/orgs/jellyfin/projects/27

Binary assets: https://repo.jellyfin.org/releases/server

User-facing Features

- SyncPlay for TV shows and Music

- Significantly improved web performance due to ES6 upgrades, Webpack, and assets served with gzip compression

- Migration of further databases to new EFCore database framework

- Redesigned OSD and Up Next dialog

- New PDF reader functionality

- New Comics (cbz/cbr) reader functionality

- New HDR thumbnails extraction functionality

- New HDR Tone mapping functionality with Nvidia NVENC, AMD AMF and Intel VAAPI (additional configuration is required)

- HEVC remuxing or transcoding over fMP4 on supported Apple devices (disabled by default)

- Allow custom fonts to be used for ASS/SSA subtitle rendering

- New default library image style (generated on library scans)

- New QuickConnect functionality (disabled by default)

- Support for limiting the number of user sessions

- Support for uploading subtitles

- Improved networking backend

- Upgrade to .NET SDK 5.0 for improved performance in the backend

- Fix issues with reboot script on Linux with Systemd

- Various fixes for iOS Safari and Edge Chromium browsers

- Various transcoding improvements

- Various bugfixes and minor improvements

- Various code cleanup

- Updated and improved plugin management interface, which prevents bugs when upgrading and improves functionality

- Fixes some bugs with DLNA

Release Notes

- [ALL] Non-reversible database changes. Ensure you back up before upgrading.

- [ALL] TVDB support has been removed from the core server. If TVDB metadata was enabled on a library, this will be disabled. TVDB support can now be obtained through a separate plugin available in the official Plugin Catalog.

- [ALL] If you use a reverse proxy with X-Forwarded-For, and have a static proxy IP, consider setting this option in the Networking admin tab for more reliable parsing.
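To show why a known proxy address makes X-Forwarded-For parsing more reliable, here is a generic sketch of the usual approach: trust the header only when the request arrives from a configured proxy, then walk the list from right to left until the first address that is not a proxy. This illustrates the general technique and is not Jellyfin's implementation. The function name and the addresses are hypothetical.

```python
def client_ip(remote_addr, x_forwarded_for, known_proxies):
    """Pick the client address, trusting X-Forwarded-For only via known proxies."""
    if remote_addr not in known_proxies:
        # Direct connection or unknown proxy: the header could be forged, ignore it.
        return remote_addr
    hops = [hop.strip() for hop in (x_forwarded_for or "").split(",") if hop.strip()]
    # The rightmost entries were appended by the proxies closest to the server.
    for hop in reversed(hops):
        if hop not in known_proxies:
            return hop
    return remote_addr


# Hypothetical addresses: one trusted proxy in front of the server.
print(client_ip("10.0.0.2", "198.51.100.7, 10.0.0.2", {"10.0.0.2"}))
```

Without a configured proxy list, a server has to either trust the header from anyone or ignore it entirely, which is why a static proxy IP helps.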

Client/Plugin (API/ABI) Developer Notes

- We have migrated from ServiceStack to ASP.NET. Web API endpoints no longer accept HTTP Form requests; everything must be application/json. NOTE: Plugins that implement endpoints will also have to migrate.

- Plugins must now target net5.0.

- IHttpClient removal: Now inject IHttpClientFactory instead.

- HttpException removal: Now catch HttpResponseException instead.

- Services can be registered to the DI pipeline.

Please see the Jellyfin Development Matrix channel for questions or further details on these changes.

Known Bugs/Tracker for 10.7.1 hotfix

Bugs which are already known and being worked on are listed in this issue: jellyfin/jellyfin-meta#1

Changelog

GitHub Project: https://github.com/orgs/jellyfin/projects/27

jellyfin [599]

- #5409 [@ikomhoog] Changed string.Length == 0 to string.IsNullOrEmpty in case of null

- #5407 [@Bond-009] Fix casing CollectionType

- #5406 [@cvium] do not throw ArgumentNullException in TryCleanString

- #5402 [@Ullmie02] Use FileShare.None when creating files

- #5383 [@cvium] do not pick a linked item as primary when merging versions

- #5381 [@cvium] make sure network path substitution matches correctly

- #5377 [@cvium] Do not use language or imagelanguages when searching for images with TMDb

- #5375 [@crobibero] Specify defaults or set query parameter to nullable

- #5356 [@cvium] return false when providerid is null or empty

- #5345 [@BaronGreenback] Dual IP4 / IP6 server fails on non-windows platforms

- #5342 [@BaronGreenback] Corrected logging message

- #5339 [@Bond-009] Revert breaking change to HasProviderId

- #5315 [@BaronGreenback] Fix for environment variable JELLYFIN_PublishedServerUrl being ignored.

- #5312 [@BaronGreenback] Fix for #5280

- #5301 [@Bond-009] Fix unchecked input

- #5290 [@Bond-009] Fix possible null ref exception

- #5278 [@BaronGreenback] Fix for #5168

- #5275 [@BaronGreenback] Fixes #5148

- #5274 [@BaronGreenback] Fix for #5254

- #5270 [@Bond-009] TMDB: Also search with IMDB or TVDB Id if specified

- #5263 [@Bond-009] TMDB: Include year in search

- #5255 [@cvium] Validate the new username when renaming

- #5251 [@crobibero] Fix vpp null reference

- #5250 [@barronpm] Fix user renaming logic

- #5230 [@orryverducci] Fix double rate deinterlacing for some TS files

- #5217 [@dkanada] handle plugin manifests automatically

- #5216 [@dkanada] remove deprecated settings from server config

- #5208 [@crobibero] Add image file accept to openapi

- #5207 [@matthin] Default to English metadata during the setup wizard.

- #5200 [@crobibero] Update to dotnet 5.0.3

- #5188 [@cvium] Exclude BOM when writing meta.json plugin manifest

- #5181 [@BaronGreenback] Fixed IPHost.TryParse

- #5171 [@Ullmie02] Fix forgot password pin request

- #5154 [@crobibero] Don't skip hidden / system files when enumerating

- #5117 [@nyanmisaka] Make FRAME-RATE field culture invariant

- #5111 [@Larvitar] Remove season name from metadata result

- #5107 [@nyanmisaka] Fix for NVDEC decoder and improvements for VAAPI tonemap

- #5106 [@BaronGreenback] Fixes zero byte nfo files.

- #5105 [@crobibero] Add null check for ImageTags

- #5099 [@crobibero] Fix openapi nullable properties

- #5095 [@Bond-009] Fix GetOrderBy and add tests

- #5091 [@crobibero] Use ArrayModelBinder for sortBy and sortOrder

- #5090 [@Ullmie02] Catch TypeLoadException during plugin load

- #5073 [@BaronGreenback] Fix for 4933: Alternative ffmpeg fix

- #5069 [@crobibero] Add ability to mark query parameter as obsolete

- #5064 [@BaronGreenback] Plugin bug fixes

- #5062 [@crobibero] Fix delete log task

- #5031 [@crobibero] Update to dotnet 5.0.2

- #5027 [@crobibero] Add parameter to disable sending first episode as next up

- #5025 [@BaronGreenback] Fix DLNA PlayTo encoding issue

- #5004 [@dkanada] remove unused notification type

- #4997 [@crobibero] Require elevated auth to upload subtitles

- #4980 [@Ullmie02] Add additional chinese languages

- #4978 [@BaronGreenback] Fixes for multiple proxies

- #4976 [@BaronGreenback] Fixed DLNA Server on RC2

- #4970 [@BaronGreenback] Change split character

- #4968 [@ianjazz246] Fix library with music directly under artist folder

- #4962 [@thornbill] Fix capitalization of Playstate message

- #4961 [@crobibero] Fix potential null reference

- #4956 [@jceresini] Fix rpm package dependencies

- #4936 [@crobibero] Fix inverted SkipWhile

- #4935 [@ConfusedPolarBear] Remove used quick connect tokens

- #4920 [@crobibero] Attach correct Blurhash to BaseItemPerson

- #4911 [@Ullmie02] Change stable ci nuget build command

- #4906 [@Spacetech] Ignore inaccessible files & folders during library scans

- #4905 [@BaronGreenback] Null exception fix

- #4902 [@BaronGreenback] Fixed loopback subnet

- #4891 [@Artiume] Split resume function for Audiobooks

- #4890 [@nielsvanvelzen] Fix search hint endpoint error

- #4884 [@crobibero] Add JsonConverter for Nullable Guids

- #4874 [@MrTimscampi] Enable TMDB and OMDB by default

- #4872 [@BaronGreenback] Removed workaround code as web is now fixed.

- #4863 [@nyanmisaka] Fix boxes in library name backdrop

- #4861 [@crobibero] Fix null reference when logging

- #4860 [@nyanmisaka] Avoid transcoding to 3ch audio for HLS streaming

- #4859 [@Ullmie02] Don't build unstable Nuget packages on tags

- #4856 [@nyanmisaka] Fix some profiles for H264 AMF encoder

- #4855 [@crobibero] Initialize JsonSerializerOptions statically

- #4852 [@ryanpetris] SchedulesDirect no longer refreshes channels properly

- #4850 [@BaronGreenback] Null reference fix

- #4847 [@crobibero] Fix another key collision in MigrateDisplayPreferencesDatabase

- #4842 [@crobibero] Add JsonDateTimeConverter

- #4836 [@crobibero] Return dashboardTheme when requesting DisplayPreferences

- #4833 [@Ullmie02] Fix similar items endpoint for movies and TV

- #4828 [@joshuaboniface] Add static Linux builds for arm and musl

- #4824 [@crobibero] Add request parameters to OpenLiveStreamDto

- #4821 [@BaronGreenback] Fix: Disable dlna server API responses if dlna is disabled.

- #4819 [@crobibero] Set filename when downloading file

- #4816 [@nyanmisaka] Fix some video profiles for Android client

- #4807 [@nyanmisaka] Correct DLNA audio codecs for PS3 and PS4

- #4803 [@ryanpetris] Fix Live TV Recording Scheduling

- #4794 [@cvium] Convert from base64 when saving item images

- #4792 [@cvium] Add missing seasons during AfterMetadataRefresh

- #4789 [@crobibero] Fix get provider id extension

- #4781 [@crobibero] Use request body for mapping xml channels

- #4774 [@nyanmisaka] Fine tune some tone mapping params

- #4773 [@Artiume] Remove opf extension for book types

- #4771 [@crobibero] Use typed UserManager GetPreference

- #4769 [@crobibero] Check correct fetcher list for provider name

- #4767 [@nyanmisaka] Fix SSL certificate cannot be saved

- #4762 [@crobibero] Fix openapi file schema

- #4761 [@crobibero] Convert CreatePlaylist to use query params instead of body

- #4758 [@nyanmisaka] Fix landing screen options

- #4757 [@cvium] Remove ImageFetcherPostScanTask

- #4756 [@crobibero] Fix inverted condition when authenticating with an ApiKey

- #4753 [@crobibero] Update to dotnet 5.0.1

- #4751 [@nyanmisaka] Use larger batch size on mpegts to avoid corrupted thumbnails

- #4750 [@crobibero] Fix blueberry

- #4749 [@crobibero] Serialize GUID without dashes

- #4743 [@crobibero] Actually use library options when filtering metadata providers

- #4741 [@Bond-009] Add tests for HdHomerunHost.GetLineup

- #4738 [@Bond-009] Add tests for HdHomerunHost.GetModelInfo

- #4737 [@crobibero] Add missing EnsureSuccessStatusCode

- #4736 [@nyanmisaka] Fix custom library order

- #4735 [@crobibero] Fix JsonConverter recursion

- #4733 [@crobibero] Fix potential null reference in OMDB

- #4730 [@crobibero] Don't serialize empty GUID to null

- #4729 [@BaronGreenback] Disable DLNA when HTTPS is required

- #4726 [@BaronGreenback] Fix - Access Denied on using certificates in windows as user.

- #4724 [@BaronGreenback] Fix null reference error in Dlna PlayTo

- #4722 [@crobibero] Fix API forbidden response

- #4716 [@OancaAndrei] Update authorization policies for SyncPlay

- #4715 [@crobibero] Add number to bool json converter

- #4713 [@crobibero] Redirect robots.txt if hosting web content

- #4711 [@barronpm] Add required attributes to parameters

- #4710 [@OancaAndrei] Restore sessions in SyncPlay groups upon reconnection

- #4709 [@BaronGreenback] Improved plugin management functionality

- #4706 [@cvium] Only apply series image aspect ratio if episode/season has no primary image

- #4701 [@crobibero] Don't return plugin versions that target newer Jellyfin version

- #4699 [@crobibero] Fix CustomItemDisplayPreferences unique key collision in the migration

- #4678 [@BaronGreenback] Change logging level and message in NetworkManager

- #4675 [@BaronGreenback] Ability to use DNS names in KnownProxies

- #4672 [@cvium] Fix MergeVersions endpoint

- #4671 [@cvium] Clear KnownNetworks and KnownProxies if none are configured explicitly

- #4669 [@MrTimscampi] Fix NPM command in CI

- #4667 [@joshuaboniface] Remove obsolete erroring command

- #4662 [@joshuaboniface] Fix bad do in bump_version

- #4661 [@Bond-009] Remove spammy debug line

- #4660 [@crobibero] Add support for web serving .mem files

- #4653 [@crobibero] Optimize FavoritePersons query

- #4652 [@crobibero] Add support for custom item display preferences

- #4651 [@crobibero] Remove IIsoMounter and IsoMounter

- #4648 [@nyanmisaka] Optimize load plugin logs

- #4647 [@rhamzeh] fix: add Palestine to supported countries

- #4645 [@crobibero] Move OpenApiSecurityScheme to OperationFilter

- #4644 [@Bond-009] Minor improvements

- #4643 [@crobibero] Fix null reference when getting filters of an empty library

- #4638 [@crobibero] Don't die if folder doesn't have id

- #4636 [@BaronGreenback] [Fix for 10.7] Missed a config move

- #4633 [@crobibero] Use Guid as API parameter type where possible

- #4632 [@crobibero] Fix MusicBrainz request Accept header

- #4630 [@Bond-009] Add tests for GetUuid

- #4629 [@crobibero] Provide NoResult instead of Fail in CustomAuthenticationHandler

- #4628 [@MrTimscampi] Prevent GetUpNext from returning episodes in progress

- #4626 [@nyanmisaka] Do not extract audio stream bitrate info for videos from formatInfo

- #4625 [@crobibero] Fix OpenApi generation for BlurHash

- #4623 [@dependabot[bot]] Bump Moq from 4.15.1 to 4.15.2

- #4622 [@dependabot[bot]] Bump prometheus-net.DotNetRuntime from 3.4.0 to 3.4.1

- #4621 [@dependabot[bot]] Bump ServiceStack.Text.Core from 5.10.0 to 5.10.2

- #4620 [@nyanmisaka] Fix transcoding reasons report

- #4613 [@BaronGreenback] [Fix] NotificationType was never set in dlna event manager

- #4610 [@nyanmisaka] Extract tone mapped thumbnails for HDR videos

- #4609 [@Bond-009] Add more tests for JsonGuidConverter

- #4608 [@dkanada] Remove deprecated flag to disable music plugins

- #4607 [@nyanmisaka] Fix the I-frame image extraction filter string

- #4605 [@hawken93] Allow JsonGuidConverter to read null

- #4597 [@nyanmisaka] Add NEO OpenCL runtime for Intel Tone mapping

- #4595 [@MrTimscampi] Don't return first episodes in next up

- #4594 [@nyanmisaka] Remove one redundant 'hwaccel vaapi' string

- #4591 [@Bond-009] Clean up SchedulesDirect

- #4589 [@ConfusedPolarBear] [Fix] Test query value

- #4588 [@Bond-009] Remove Hex class as the BCL has one now

- #4582 [@crobibero] Use proper Named HttpClient for MusicBrainz requests

- #4580 [@crobibero] Specify default DateTimeKind from EFCore

- #4575 [@crobibero] Don't throw null reference if ContentType is null.

- #4574 [@joshuaboniface] Revert "Enable jellyfin.service unit on Fedora fresh install"

- #4570 [@nyanmisaka] Add Tonemapping for Intel VAAPI

- #4568 [@crobibero] Serialize Guid.Empty to null

- #4563 [@crobibero] Fix sending PlaybackInfo

- #4562 [@crobibero] Don't send activity event if notification type is null

- #4557 [@crobibero] Fix namespace and add attribute for ClientCapabilitiesDto

- #4554 [@joshuaboniface] Run explicit service start if restart failed

- #4553 [@joshuaboniface] Enable jellyfin.service unit on Fedora fresh install

- #4551 [@crobibero] Only trim file name if folder name is shorter

- #4550 [@crobibero] Handle invalid plugins

- #4548 [@orryverducci] Revert "Fix frame rate probing for interlaced MKV files"

- #4545 [@BaronGreenback] [Fix] Null Pointer in TmdbMovieProvider

- #4544 [@BaronGreenback] [Fix] Config option read from wrong place.

- #4539 [@crobibero] Add NullableEnumModelBinder and NullableEnumModelBinderProvider

- #4538 [@mario-campos] Implement CodeQL Static Analysis

- #4537 [@crobibero] Convert ClientCapabilities to a Dto with JsonConverters

- #4534 [@Bond-009] Remove UTF8 bom from some files

- #4533 [@Bond-009] Fix nullref

- #4526 [@crobibero] Fix marking item as played

- #4525 [@crobibero] Set DeleteTranscodeFileTask to trigger every 24h

- #4524 [@crobibero] Use sdk 5.x

- #4523 [@crobibero] Set sdk version to 5.0

- #4522 [@BaronGreenback] Set plugin version to that specified in the manifest

- #4519 [@crobibero] Write DateTimes in ISO8601 format for backwards compatibility.

- #4518 [@crobibero] Fix live tv hls playback

- #4517 [@BaronGreenback] [Fix] Fixed Plugin versioning in browser notifications

- #4516 [@oddstr13] Fix plugin old version cleanup

- #4515 [@barronpm] Clean up DeviceManager and Don't Store Capabilities on Disk

- #4514 [@Artiume] Update FFmpeg log

- #4513 [@BaronGreenback] Multi-repository plugins

- #4510 [@crobibero] Set default request accept headers

- #4509 [@crobibero] Fix null reference when saving plugin configuration

- #4507 [@BaronGreenback] Corrects spelling in comments

- #4504 [@crobibero] Fix Environment authorization policy

- #4503 [@Bond-009] Pass cancellation where possible

- #4502 [@crobibero] Use ALL the decompression methods

- #4501 [@ferferga] Don't scale extracted images and ffmpeg improvements

- #4500 [@oddstr13] Use .NET 5.0 in Nuget pipeline

- #4499 [@crobibero] Reduce RequestHelpers.Split usage and remove RequestHelpers.GetGuids

- #4497 [@crobibero] Fix docker and centos builds

- #4494 [@nyanmisaka] Add video range info to the title

- #4493 [@crobibero] Fix dockerfiles

- #4492 [@Artiume] update dotnet 5.0 buster image

- #4490 [@dependabot[bot]] Bump Mono.Nat from 3.0.0 to 3.0.1

- #4489 [@dependabot[bot]] Bump PlaylistsNET from 1.1.2 to 1.1.3

- #4488 [@dependabot[bot]] Bump Moq from 4.14.7 to 4.15.1

- #4487 [@crobibero] Upgrade all netcore3.1 to net5.0

- #4486 [@crobibero] Remove api client generator errors

- #4485 [@crobibero] Update user cache after updating user.

- #4480 [@crobibero] Remove custom HttpException

- #4478 [@Bond-009] Don't allocate single char arrays when possible

- #4477 [@nyanmisaka] Fix return type for GetAttachment

- #4476 [@crobibero] Fix plugin update exception

- #4475 [@crobibero] Fix api client ci condition

- #4474 [@crobibero] Convert array property to IReadOnlyList

- #4473 [@crobibero] Don't throw exception when converting values using binder or JsonConv…

- #4469 [@cvium] Change OPTIONS to POST and call SaveConfiguration in SetRepositories

- #4468 [@cvium] Semi-revert removal of Name for /Similar in openapi

- #4466 [@kayila] Fix #4465 by adding the missing extras folders.

- #4463 [@crobibero] Skip migration if user doesn't exist

- #4460 [@yodatak] Bump dependencies to Fedora 33

- #4458 [@crobibero] Upgrade to Net5

- #4456 [@martinek-stepan] Emby.Naming - nullable & code coverage

- #4452 [@crobibero] Add ModelBinder to ImageType

- #4450 [@cvium] Remove duplicate /Similar endpoints

- #4448 [@crobibero] Don't throw exception if name is null

- #4447 [@dependabot[bot]] Bump Serilog.Sinks.Graylog from 2.2.1 to 2.2.2

- #4446 [@dependabot[bot]] Bump Microsoft.NET.Test.Sdk from 16.7.1 to 16.8.0

- #4444 [@crobibero] Remove unstable npm ci task

- #4443 [@cvium] Remove OriginalAuthenticationInfo and add IsAuthenticated property

- #4440 [@neilsb] Perform hashing of Password for Schedules Direct on server

- #4437 [@crobibero] Add missing dlna attributes.

- #4434 [@BaronGreenback] Fixes #4423 - Fixes DLNA in unstable

- #4432 [@nyanmisaka] Add initial support for HEVC over FMP4-HLS

- #4428 [@crobibero] Add x-jellyfin-version to openapi spec

- #4427 [@joshuaboniface] Reverse order of sudo and nohup

- #4426 [@joshuaboniface] Remove spurious argument to nohup

- #4425 [@joshuaboniface] Restore missing targetFolder

- #4424 [@Bond-009] Minor perf improvements

- #4422 [@crobibero] Add /Users/Me endpoint

- #4420 [@cvium] Fix Persons, Genres and Studios endpoints

- #4416 [@dkanada] Disable compatibility checks until they work again

- #4413 [@cvium] Rename itemIds to ids

- #4412 [@cvium] Save new display preferences

- #4411 [@crobibero] Fix endpoint authorization requirements

- #4410 [@crobibero] Set UserAgent when getting M3u playlist

- #4409 [@crobibero] Fix API separators

- #4408 [@crobibero] Dependency catch up

- #4406 [@joshuaboniface] Remove jellyfin-ffmpeg dep from server package

- #4405 [@nyanmisaka] Fix AAC direct streaming

- #4403 [@Bond-009] Http1AndHttp2 is the default, no need to explicitly enable it

- #4401 [@nyanmisaka] Respect music quality settings when transcoding

- #4395 [@barronpm] Convert some code in UserManager to async

- #4393 [@crobibero] Support IReadOnlyList in CommaDelimitedArrayModelBinder

- #4392 [@crobibero] Fix LiveTV TS playback

- #4391 [@crobibero] Support IReadOnlyList for JsonCommaDelimitedArrayConverter

- #4388 [@crobibero] Add missing slashes in ffmpeg argument.

- #4384 [@nyanmisaka] Fix HLS music playback on iOS

- #4378 [@barronpm] Fix possible null reference exception

- #4377 [@barronpm] Add caching to users

- #4375 [@crobibero] Fix setting duplicate keys from auth header

- #4371 [@cvium] Fix GET ScheduledTasks return value

- #4369 [@orryverducci] Fix frame rate probing for interlaced MKV files

- #4361 [@ssenart] Add FLAC and define the corresponding target sample rate

- #4350 [@crobibero] Fix .npmrc

- #4347 [@dependabot[bot]] Bump Moq from 4.14.6 to 4.14.7

- #4346 [@dependabot[bot]] Bump prometheus-net from 3.6.0 to 4.0.0

- #4342 [@crobibero] Add BaseItemManager

- #4341 [@Bond-009] Minor improvements

- #4339 [@BaronGreenback] Making default Plugin configurations accessible when developing.

- #4331 [@crobibero] Add npmAuthenticate task

- #4330 [@crobibero] Fix ApiKey authentication

- #4328 [@crobibero] Remove CommaDelimitedArrayModelBinderProvider

- #4326 [@crobibero] Automatically clean activity log database

- #4324 [@crobibero] Update to dotnet 3.1.9

- #4317 [@Bond-009] Fix AudioBookListResolver test coverage

- #4315 [@Jan-PieterBaert] Fix some warnings

- #4312 [@crobibero] Add comma delimited string to array json converter

- #4309 [@nielsvanvelzen] Make StartupWizardCompleted nullable in PublicSystemInfo

- #4306 [@crobibero] Remove references to legacy scripts

- #4305 [@crobibero] Convert image type string to enum.

- #4304 [@crobibero] Convert exclude location type string to enum.

- #4303 [@crobibero] Convert filters string to enum.

- #4302 [@crobibero] Convert field string to enum.

- #4301 [@crobibero] Fix comma delimited array model binder

- #4300 [@crobibero] Fix ci npm install order

- #4299 [@crobibero] Fix api client CI

- #4298 [@crobibero] Remove TheTVDB plugin from server source.

- #4292 [@crobibero] Add missing general commands

- #4286 [@Bond-009] Minor improvements to tmdb code

- #4285 [@cvium] Fix IWebSocketListener service registration

- #4284 [@cvium] Fix playbackstart not triggering in the new eventmanager

- #4281 [@crobibero] Fix registry name and link

- #4279 [@joshuaboniface] Make MaxActiveSessions not nullable

- #4277 [@dependabot[bot]] Bump Moq from 4.14.5 to 4.14.6

- #4276 [@cvium] SecurityException should return 403

- #4275 [@anthonylavado] Fix Transcode Cleanup Schedule

- #4274 [@barronpm] Rewrite Activity Log Backend

- #4273 [@joshuaboniface] Fix RPM spec again

- #4271 [@joshuaboniface] Improve handling of apiclient generator

- #4269 [@joshuaboniface] Add user max sessions options

- #4268 [@Bond-009] Improve GroupInfo class

- #4267 [@cvium] Disable invalid auth provider

- #4266 [@Maxr1998] Log stream type and codec for missing direct play profile

- #4265 [@KonH] Remove unnecessary null checks in some places

- #4264 [@Camc314] Add missing properties to typescript axios generator

- #4263 [@cvium] Defer image pre-fetching until the end of a refresh/scan

- #4262 [@anthonylavado] Remove Windows legacy files

- #4261 [@Spacetech] Make MusicBrainzAlbumProvider thread safe and fix retry logic

- #4260 [@crobibero] Allow server to return .data files

- #4259 [@ConfusedPolarBear] Accept ImageFormat as API parameter

- #4257 [@Bond-009] Add tests for deserializing guids

- #4255 [@crobibero] Generate document file for openapi spec in CI

- #4254 [@BaronGreenback] Fix for #4241: Plugin config initialisation.

- #4253 [@BaronGreenback] DI in plugins

- #4252 [@skyfrk] Convert supportedCommands strings to enums

- #4249 [@EraYaN] Publish OpenAPI spec for master and tagged releases

- #4248 [@crobibero] Manually register models used in websocket messages.

- #4247 [@crobibero] Update all on-disk plugins

- #4243 [@jlechem] Removing string we don't use anymore.

- #4242 [@Spacetech] Increase library scan and metadata refresh speed

- #4236 [@ConfusedPolarBear] Fix some warnings

- #4233 [@dependabot[bot]] Bump Mono.Nat from 2.0.2 to 3.0.0

- #4232 [@dependabot[bot]] Bump TvDbSharper from 3.2.1 to 3.2.2

- #4231 [@dependabot[bot]] Bump Serilog.Sinks.Graylog from 2.1.3 to 2.2.1

- #4230 [@dependabot[bot]] Bump BlurHashSharp.SkiaSharp from 1.1.0 to 1.1.1

- #4229 [@dependabot[bot]] Bump BlurHashSharp from 1.1.0 to 1.1.1

- #4228 [@dependabot[bot]] Bump IPNetwork2 from 2.5.224 to 2.5.226

- #4227 [@dependabot[bot]] Bump Swashbuckle.AspNetCore.ReDoc from 5.5.1 to 5.6.3

- #4226 [@dependabot[bot]] Bump Swashbuckle.AspNetCore from 5.5.1 to 5.6.3

- #4225 [@Spacetech] Check response status code before saving images

- #4222 [@Spacetech] Use ConcurrentDictionary's in DirectoryService

- #4221 [@Spacetech] Fix InvalidOperationException in TvdbSeriesProvider.MapSeriesToResult

- #4220 [@Spacetech] Fix invalid operation exception in TvdbEpisodeImageProvider.GetImages

- #4219 [@Spacetech] Increase initial scan speed for music libraries

- #4217 [@crobibero] Properly handle null structs in json

- #4213 [@cvium] Add ProgressiveFileStream

- #4212 [@BaronGreenback] Null Pointer fix: BaseControlHandler.cs

- #4211 [@BaronGreenback] Null Pointer Fix : PlayToController.cs

- #4210 [@nielsvanvelzen] Use enum for WebSocket message types

- #4209 [@cvium] Add Dto to ForgotPassword

- #4208 [@cvium] Fix Identify by renaming route parameter to match function argument

- #4207 [@joshuaboniface] Revamp the main README

- #4205 [@cvium] Fix aspect ratio calculation sometimes returning 0 or 1

- #4204 [@cvium] Add series image aspect ratio when ep/season is missing an image

- #4202 [@cvium] Migrate the TMDb providers to the TMDbLib library

- #4200 [@ryanpetris] HDHomeRun: Preemptively throw a LiveTvConflictException

- #4199 [@ryanpetris] Fix stream performance when opening/closing new streams.

- #4194 [@nvllsvm] Optimize images

- #4192 [@nielsvanvelzen] Use GeneralCommandType enum in GeneralCommand

- #4189 [@Bond-009] Minor improvements

- #4187 [@BaronGreenback] Fix for #4184 when no FFMPEG path set.

- #4186 [@BaronGreenback] Fixes #4185 : FFMPeg version match exception.

- #4183 [@Ullmie02] Fix TMDB Season Images

- #4182 [@ryanpetris] Fix HD Home Run streaming

- #4178 [@hoanghuy309] Update LocalizationManager.cs

- #4177 [@cvium] Remove dummy season and missing episode provider

- #4176 [@MrTimscampi] Update SkiaSharp.NativeAssets.Linux to 2.80.2

- #4173 [@BaronGreenback] Unstable: Various controller fixes.

- #4171 [@nyanmisaka] Add tonemapping for AMD AMF

- #4170 [@BaronGreenback] Plugin versioning - amended for plugins without meta.json

- #4169 [@stanionascu] Playback (direct-stream/transcode) of BDISO/BDAV containers

- #4164 [@spooksbit] Removed browser auto-launch functionality

- #4163 [@Bond-009] Minor improvements

- #4162 [@BaronGreenback] Fix for #4161: BaseUrl in DLNA

- #4156 [@androiddevnotes] Fix typos

- #4145 [@dependabot[bot]] Bump SkiaSharp from 2.80.1 to 2.80.2

- #4142 [@olsh] Fix parameters validation in ImageProcessor.GetCachePath

- #4139 [@BaronGreenback] DLNA MediaRegistrar - static and commented.

- #4138 [@BaronGreenback] DLNA ContentManager - static and commented.

- #4137 [@BaronGreenback] DLNA ConnectionManager - static and commented.

- #4136 [@BaronGreenback] DLNA Classes - No code change, just added commenting to classes.

- #4128 [@derchu] Update content rating from thetvdb

- #4126 [@crobibero] update to dotnet 3.1.8

- #4125 [@BaronGreenback] Networking 2 (Cumulative PR) - Swapping over to new NetworkManager

- #4121 [@cvium] Normalize application paths

- #4118 [@SegiH] Change default value for allow duplicates in playlist option to False

- #4116 [@cvium] Add Known Proxies to system configuration

- #4114 [@crobibero] Add new files to rpm build

- #4108 [@Bond-009] Minor performance improvements to item saving

- #4106 [@Keridos] some testing for AudioBook

- #4103 [@Bond-009] Fix some warnings

- #4102 [@cvium] Skip startup message for /system/ping

- #4096 [@crobibero] Fix catching authentication exception

- #4094 [@crobibero] Fix redirection

- #4093 [@crobibero] Fix api routes

- #4092 [@crobibero] Add missing FromRoute, Required attribute

- #4084 [@BaronGreenback] Unstable: PlayTo corruption url fix

- #4082 [@cromefire] More expressive names for the VideoStream API

- #4079 [@dependabot[bot]] Bump SQLitePCLRaw.bundle_e_sqlite3 from 2.0.3 to 2.0.4

- #4078 [@Bond-009] Minor improvements

- #4077 [@BaronGreenback] Simplified Code: Removed code which was never used.

- #4076 [@Bond-009] Fix some warnings

- #4075 [@BaronGreenback] Simplified Code: Removed code which was never used.

- #4074 [@cvium] Fix null exception in tmdb episode provider

- #4073 [@Bond-009] Fix ObjectDisposedException

- #4071 [@Bond-009] Fix sln file

- #4070 [@crobibero] Add ci task to publish api client

- #4069 [@crobibero] Make all FromRoute required

- #4068 [@barronpm] Fix Plugin Events and Clean Up InstallationManager.cs

- #4067 [@barronpm] DisplayPreferences fixes

- #4065 [@BaronGreenback] Bug Fix : DLNA Server advertising

- #4063 [@BaronGreenback] Out of Memory fix when streaming large files

- #4062 [@BaronGreenback] Fix for #4059

- #4061 [@BaronGreenback] Fix for #4060

- #4057 [@crobibero] Add flag for startup completed

- #4055 [@Ullmie02] Enable HTTP Range Processing (Fix seeking)

- #4054 [@lmaonator] Fix TVDB plugin not handling absolute display order

- #4053 [@thornbill] Fix aac mime-type

- #4051 [@crobibero] Replace swagger logo with jellyfin logo

- #4048 [@crobibero] Remove GenerateDocumentationFile

- #4047 [@crobibero] Use efcore library for health check

- #4046 [@EraYaN] Enable code coverage and upload OpenAPI spec.

- #4045 [@crobibero] Add db health check

- #4043 [@cvium] Split HttpListenerHost into middlewares

- #4042 [@EraYaN] Fixes for CI Nuget package pushing and CI triggers

- #4041 [@EraYaN] Add the item path to the ItemLookupInfo class

- #4039 [@cvium] Remove ServiceStack and related stuff

- #4037 [@crobibero] Set openapi schema type to file where possible

- #4035 [@crobibero] Fix apidoc routes with base url

- #4034 [@barronpm] Fix all warnings in Jellyfin.Data

- #4033 [@crobibero] Readd nullable number converters

- #4031 [@Bond-009] Fix some warnings

- #4030 [@crobibero] Remove IHttpClient

- #4028 [@crobibero] Properly verify cache duration

- #4027 [@cvium] Fix model binding in UpdateLibraryOptions

- #4026 [@dependabot[bot]] Bump prometheus-net.DotNetRuntime from 3.3.1 to 3.4.0

- #4024 [@dependabot[bot]] Bump IPNetwork2 from 2.5.211 to 2.5.224

- #4022 [@Bond-009] Fix incorrect usage of ArrayPool

- #4018 [@barronpm] Library Entity Cleanup

- #4013 [@crobibero] Allow CORS domains to be configured

- #4010 [@cromefire] Fix wrong OpenAPI auth header value

- #4008 [@crobibero] Include xml docs when publishing

- #4002 [@crobibero] Fix partial library and channel access

- #4001 [@brianjmurrell] Add an empty %files section to main package

- #3999 [@PrplHaz4] [Permissions] Fix for individual channel plugins #2858

- #3988 [@crobibero] Use proper SPDX Identifier

- #3984 [@crobibero] Use Prerelease System.Text.Json

- #3983 [@Bond-009] Fix incorrect adding of user agent

- #3977 [@barronpm] Make LibraryController.GetDownload async

- #3976 [@nyanmisaka] Expose max_muxing_queue_size to user

- #3975 [@nyanmisaka] Increase the max muxing queue size for ffmpeg

- #3961 [@crobibero] Ignore null json values

- #3959 [@Bond-009] Enable TreatWarningsAsErrors for Emby.Data in Release

- #3958 [@Bond-009] Enable TreatWarningsAsErrors for MediaBrowser.Controller in Release

- #3955 [@Bond-009] Make some methods async

- #3954 [@Ullmie02] Use backdrop with library name as library thumbnail

- #3953 [@crobibero] bump DotNet.Glob

- #3951 [@crobibero] Add nullable int32, int64 json converters

- #3950 [@crobibero] Fix dlna play to

- #3947 [@Bond-009] Fix all warnings in Emby.Dlna

- #3946 [@crobibero] Clean up output formatters

- #3943 [@Bond-009] Simplify FFmpeg detection code

- #3942 [@Ullmie02] Reduce warnings in Emby.Dlna

- #3941 [@crobibero] Convert all remaining form request to body

- #3939 [@Bond-009] Make MediaBrowser.MediaEncoding warnings free

- #3938 [@crobibero] Fix conflicting audio routes

- #3935 [@crobibero] Add Default Http Client

- #3932 [@crobibero] Add support for custom api-doc css

- #3928 [@Mygod] Add 1440p to the mix

- #3925 [@crobibero] Remove IHttpClient from Providers

- #3910 [@barronpm] Event Rewrite (Part 1)

- #3908 [@crobibero] Use proper mediatypename

- #3907 [@crobibero] Fix DLNA Routes

- #3903 [@crobibero] Add xml output formatter

- #3899 [@crobibero] Install specific plugin version if requested

- #3898 [@crobibero] Return int64 in json as number

- #3895 [@crobibero] Remove IHttpClient from Jellyfin.Api

- #3894 [@barronpm] Remove ListHelper.cs

- #3892 [@barronpm] Minor fixes to LiveTvMediaSourceProvider

- #3891 [@barronpm] Remove unused methods in IDtoService

- #3889 [@Ullmie02] Build Unstable NuGet packages

- #3886 [@crobibero] bump deps

- #3880 [@DirtyRacer1337] Fix year parsing

- #3879 [@cvium] Populate ThemeVideoIds and ThemeSongIds

- #3877 [@orryverducci] Deinterlacing improvements

- #3874 [@danieladov] Fix MergeVersions()

- #3872 [@crobibero] Fix setting user policy

- #3871 [@Ullmie02] Allow plugins to define their own api endpoints

- #3868 [@dependabot[bot]] Bump ServiceStack.Text.Core from 5.9.0 to 5.9.2

- #3867 [@dependabot[bot]] Bump TvDbSharper from 3.2.0 to 3.2.1

- #3866 [@dependabot[bot]] Bump Microsoft.NET.Test.Sdk from 16.6.1 to 16.7.0

- #3865 [@dependabot[bot]] Bump Swashbuckle.AspNetCore.ReDoc from 5.3.3 to 5.5.1

- #3863 [@EraYaN] Add nohup and continueOnError to the Collect Artifacts task

- #3861 [@crobibero] API Fixes

- #3860 [@cvium] Fix collages

- #3859 [@crobibero] Fix Requirement assigned to Handler

- #3858 [@cvium] Fix startup wizard redirect

- #3854 [@danieladov] Fix Split versions

- #3851 [@barronpm] Clean up LibraryChangedNotifier.

- #3849 [@barronpm] Make DisplayPreferencesManager Scoped

- #3846 [@YouKnowBlom] Avoid including stray commas in HLS codecs field

- #3841 [@Bond-009] Fix warnings

- #3840 [@barronpm] Fix MemoryCache Usage.

- #3838 [@Bond-009] MemoryStream optimizations

- #3837 [@cvium] Fix BaseItems not being cached in-memory

- #3836 [@cvium] Remove rate limit from TMDb provider

- #3835 [@cvium] Throw HttpException when tvdb sends us crap data

- #3834 [@cvium] Make external ids nullable in TMDb

- #3831 [@joshuaboniface] Bump to .NET Core SDK 3.1.302

- #3824 [@barronpm] Clean up TunerHost Classes

- #3822 [@EraYaN] Merge the args and commands item for the artifact collection

- #3820 [@Bond-009] Fix some warnings

- #3816 [@cvium] Change OnRefreshStart and OnRefreshComplete logging levels to debug

- #3812 [@barronpm] Merge API Migration into master

- #3810 [@AlfHou] Fix README links and note about setup wizard

- #3809 [@Bond-009] Minor improvements

- #3806 [@dkanada] Disable compatibility checks for now

- #3805 [@dependabot[bot]] Bump Mono.Nat from 2.0.1 to 2.0.2

- #3804 [@dependabot[bot]] Bump Serilog.AspNetCore from 3.2.0 to 3.4.0

- #3803 [@dependabot[bot]] Bump sharpcompress from 0.25.1 to 0.26.0

- #3802 [@dependabot[bot]] Bump PlaylistsNET from 1.0.6 to 1.1.2

- #3801 [@michael9dk] Update README.md (fix broken links)

- #3795 [@anthonylavado] Update to newer Jellyfin.XMLTV

- #3792 [@cvium] TMDb: Change Budget and Revenue to long to avoid overflow

- #3790 [@cvium] Remove some unnecessary string allocations

- #3784 [@barronpm] Minor fixes to ActivityManager

- #3782 [@Bond-009] Minor fixes for websocket code

- #3774 [@EraYaN] Add a much shorter timeout to the CollectArtifacts job

- #3772 [@EraYaN] Updated SkiaSharp to 2.80.1 and replace resize code to fix bad quality

- #3769 [@dkanada] Remove useless order step for intros

- #3761 [@cvium] Fix DI memory leak

- #3760 [@thornbill] Fix inverted logic for LAN IP detection

- #3759 [@AlfHou] Change 'nowebcontent' flag to 'nowebclient' flag in readme

- #3757 [@cvium] Update BlurHashSharp and set max size to 128x128

- #3747 [@barronpm] Use Memory Cache

- #3740 [@Bond-009] Optimize Substring and StringBuilder usage

- #3728 [@nyanmisaka] adjust priority in outputSizeParam cutter

- #3727 [@K900] Fix #3624

- #3725 [@joshuaboniface] Flip quoting in variable set command

- #3724 [@joshuaboniface] Bump master version to 10.7.0 for next release

- #3723 [@joshuaboniface] Get and tag with the actual release version in CI

- #3720 [@joshuaboniface] Fix bump_version so it works properly

- #3711 [@yrjyrj123] Fix the problem that hardware decoding cannot be used on macOS.

- #3704 [@oddstr13] Don't ignore dot directories or movies/episodes with sample in their name.

- #3703 [@oddstr13] Allow space in username

- #3699 [@oddstr13] Fix embedded subtitles

- #3690 [@MichaIng] Fix left /usr/bin/jellyfin symlink on removal and typo

- #3684 [@Bond-009] Fix warnings

- #3683 [@nyanmisaka] Allows to provide multiple fallback fonts for client to render subtitles

- #3679 [@barronpm] Use System.Text.Json in DefaultPasswordResetProvider

- #3678 [@barronpm] Remove Unused Dependencies.

- #3677 [@barronpm] Fixed compilation error on master.

- #3675 [@ferferga] fix typo in debian's config file

- #3671 [@Bond-009] Make UNIX socket configurable

- #3666 [@barronpm] Use System.Text.Json in LiveTvManager

- #3665 [@barronpm] Use interfaces in app host constructors

- #3664 [@Bond-009] Make CreateUser async

- #3663 [@crobibero] Add missing usings to UserManager

- #3660 [@crobibero] Force plugin config location

- #3659 [@Bond-009] Optimize StringBuilder.Append calls

- #3657 [@Bond-009] Review usage of string.Substring (part 1)

- #3649 [@thornbill] Skip image processing for live tv sources

- #3646 [@barronpm] Make IncrementInvalidLoginAttemptCount async.

- #3642 [@crobibero] Try adding plugin repository again

- #3634 [@crobibero] fix built in plugin js

- #3632 [@azlm8t] tvdb: Log path on lookup errors

- #3620 [@BaronGreenback] Fix for #3607 and #3515

- #3616 [@crobibero] Allow migration to optionally run on fresh install

- #3615 [@nyanmisaka] Fix QSV device creation on Comet Lake

- #3613 [@Bond-009] Replace \d with [0-9] in ffmpeg detection and scan code

- #3609 [@Bond-009] Fix warnings

- #3604 [@joshuaboniface] Fix bad Debuntu dependencies

- #3602 [@crobibero] Fix username case change

- #3598 [@barronpm] Clean up ProviderManager.cs

- #3597 [@barronpm] Jellyfin.Drawing.Skia Cleanup

- #3595 [@Bond-009] Improve DescriptionXmlBuilder

- #3578 [@barronpm] Migrate Display Preferences to EF Core

- #3577 [@crobibero] Specify plugin repo on plugin installation

- #3576 [@HelloWorld017] Fix SAMI UTF-16 Encoding Bug

- #3552 [@BaronGreenback] Fixes #3551 (Notifications Serialization error)

- #3532 [@Ullmie02] Add support for binding to Unix socket

- #3521 [@sachk] Fix support for mixed-protocol subtitles

- #3508 [@BaronGreenback] Part 1: nullable Emby.DLNA

- #3442 [@nyanmisaka] Tonemapping function relying on OpenCL filter and NVENC HEVC decoder

- #3401 [@BaronGreenback] Fix for windows plug-in upgrades issue: #1623

- #3366 [@barronpm] Remove UserManager.AddParts

- #3216 [@rotvel] Try harder at detecting ffmpeg version and enable the validation

- #3196 [@ferferga] Remove "download images in advance" option

- #3194 [@OancaAndrei] SyncPlay for TV series (and Music)

- #3086 [@redSpoutnik] Add Post subtitle in API

- #3053 [@rigtorp] Add additional resolver tests

- #2888 [@ConfusedPolarBear] Add quick connect (login without typing password)

- #2788 [@ThatNerdyPikachu] Use embedded title for other track types

jellyfin-web [474]

- jellyfin/jellyfin-web#2482 [@cvium] don't use Locations as an indicator for AddLibrary

- jellyfin/jellyfin-web#2473 [@thornbill] Add hash to bundle urls for cache busting

- jellyfin/jellyfin-web#2470 [@pgeorgi] browser.js: Avoid misdetecting Chrome OS as OS X

- jellyfin/jellyfin-web#2461 [@thornbill] Remove iOS bandwidth limit

- jellyfin/jellyfin-web#2443 [@dmitrylyzo] Fix attachment delivery urls

- jellyfin/jellyfin-web#2442 [@dkanada] minor improvements to plugin pages

- jellyfin/jellyfin-web#2417 [@MrLemur] Change babel.config.js sourceType to unambiguous

- jellyfin/jellyfin-web#2378 [@dkanada] update style for active sessions

- jellyfin/jellyfin-web#2375 [@ferferga] fix: message appearing after adding repositories

- jellyfin/jellyfin-web#2374 [@cvium] reject play access validation promise

- jellyfin/jellyfin-web#2358 [@Alcatraz077] Allows Search On Tizen

- jellyfin/jellyfin-web#2357 [@thornbill] Fix epub player height

- jellyfin/jellyfin-web#2356 [@ferferga] fix: notched devices area not covered

- jellyfin/jellyfin-web#2353 [@thornbill] Fix scaling in comics player

- jellyfin/jellyfin-web#2350 [@nyanmisaka] Options for enhanced NVDEC and VPP tonemap

- jellyfin/jellyfin-web#2344 [@ferferga] refactor: remove unused imports

- jellyfin/jellyfin-web#2343 [@dkanada] fix image alignment on plugin cards

- jellyfin/jellyfin-web#2327 [@MrChip53] Edit admin dashboard menu for plugins

- jellyfin/jellyfin-web#2323 [@jarnedemeulemeester] Fix replay icon not getting replaced with play_arrow icon

- jellyfin/jellyfin-web#2318 [@thornbill] Fix removed ButtonDelete key

- jellyfin/jellyfin-web#2313 [@dmitrylyzo] Fix browser detection: Safari vs Tizen

- jellyfin/jellyfin-web#2312 [@jarnedemeulemeester] Use local version of Noto Sans if available

- jellyfin/jellyfin-web#2311 [@nielsvanvelzen] Disable multi download option

- jellyfin/jellyfin-web#2309 [@ferferga] fix(card): white flashing images

- jellyfin/jellyfin-web#2306 [@thornbill] Fix tiny card icons

- jellyfin/jellyfin-web#2293 [@thornbill] Fix latest tab links for tv and music

- jellyfin/jellyfin-web#2290 [@MrTimscampi] Add Chromecast error messages to the locales

- jellyfin/jellyfin-web#2288 [@joshuaboniface] Bump API client to 1.6.0

- jellyfin/jellyfin-web#2286 [@Artiume] Split Audiobook Resume

- jellyfin/jellyfin-web#2283 [@thornbill] Allow decimal entry for bitrate on mobile

- jellyfin/jellyfin-web#2280 [@Artiume] Add missing Languages to Web

- jellyfin/jellyfin-web#2269 [@dkanada] Fix issue with double click fullscreen

- jellyfin/jellyfin-web#2265 [@MrTimscampi] Sort items by premiere date on the details page

- jellyfin/jellyfin-web#2263 [@MrTimscampi] Fix OSD gradients not letting pointer events through

- jellyfin/jellyfin-web#2260 [@thornbill] Fix chevron centering on home section titles

- jellyfin/jellyfin-web#2258 [@thornbill] Restore the dashboard theme option

- jellyfin/jellyfin-web#2247 [@thornbill] Always allow stopping via the action menu

- jellyfin/jellyfin-web#2246 [@thornbill] Fix layout issues on mobile item details

- jellyfin/jellyfin-web#2244 [@Artiume] Fix Continue Listening

- jellyfin/jellyfin-web#2242 [@thornbill] Replace bash prepare script with node version

- jellyfin/jellyfin-web#2240 [@thornbill] Remove duplicate try/catch

- jellyfin/jellyfin-web#2239 [@thornbill] Disable browser hack rule for sass files

- jellyfin/jellyfin-web#2238 [@thornbill] Fix restart button being shown when unsupported

- jellyfin/jellyfin-web#2237 [@thornbill] Fix layout of plugin cards

- jellyfin/jellyfin-web#2236 [@thornbill] Fix touch support in epub reader

- jellyfin/jellyfin-web#2234 [@thornbill] Use Noto Sans from Fontsource

- jellyfin/jellyfin-web#2225 [@BaronGreenback] Plugin manager changes

- jellyfin/jellyfin-web#2224 [@Delgan] Fix possible HLSError (BufferFullError) on Firefox

- jellyfin/jellyfin-web#2222 [@nyanmisaka] Modify some tone mapping related strings

- jellyfin/jellyfin-web#2220 [@dependabot[bot]] Bump ini from 1.3.5 to 1.3.7

- jellyfin/jellyfin-web#2219 [@crobibero] Set Content-Type header when creating a playlist

- jellyfin/jellyfin-web#2218 [@thornbill] Fix style issues on dashboard page

- jellyfin/jellyfin-web#2217 [@nyanmisaka] Landing screen options clean up

- jellyfin/jellyfin-web#2216 [@dmitrylyzo] Fix canPlay for Live TV

- jellyfin/jellyfin-web#2215 [@dmitrylyzo] Fix multiplication of event listeners on Live TV pages

- jellyfin/jellyfin-web#2214 [@dmitrylyzo] Fix LiveTV group anchors

- jellyfin/jellyfin-web#2213 [@thornbill] Fix sonarqube bugs

- jellyfin/jellyfin-web#2211 [@thornbill] Fix invalid dlna profile path

- jellyfin/jellyfin-web#2210 [@nyanmisaka] Set the step of subtitle offset slider to 0.1

- jellyfin/jellyfin-web#2202 [@thornbill] Remove reference to sharing help element

- jellyfin/jellyfin-web#2195 [@OancaAndrei] Fix SyncPlay switching to next item in queue

- jellyfin/jellyfin-web#2188 [@dmitrylyzo] Fix anchor click action and plugin configuration page URL

- jellyfin/jellyfin-web#2186 [@Maxr1998] Fix plugin loader for function definitions in window

- jellyfin/jellyfin-web#2183 [@dmitrylyzo] SyncPlay, don't use bad ApiClient

- jellyfin/jellyfin-web#2181 [@MrTimscampi] Remove non-existing UserData field from requests

- jellyfin/jellyfin-web#2177 [@anthonylavado] Update the API Client version

- jellyfin/jellyfin-web#2175 [@thornbill] Fix invalid guide link

- jellyfin/jellyfin-web#2174 [@thornbill] Fix comics player

- jellyfin/jellyfin-web#2173 [@Maxr1998] Simplify hiding menu items based on supported features

- jellyfin/jellyfin-web#2172 [@Maxr1998] Fix local bind address using wrong config value

- jellyfin/jellyfin-web#2171 [@nyanmisaka] Fix the issue where the bitrate option is always Auto

- jellyfin/jellyfin-web#2165 [@Maxr1998] Fix select server item in drawer menu

- jellyfin/jellyfin-web#2164 [@thornbill] Fix more link issues

- jellyfin/jellyfin-web#2163 [@thornbill] Prevent default submit event on add plugin repo form

- jellyfin/jellyfin-web#2162 [@h1dden-da3m0n] update(ci): dependabot config from v1 to v2

- jellyfin/jellyfin-web#2161 [@thornbill] Add item path to card data to support canPlay check

- jellyfin/jellyfin-web#2160 [@thornbill] Fix multiple hashes added to route

- jellyfin/jellyfin-web#2159 [@thornbill] Plugin manager improvements

- jellyfin/jellyfin-web#2158 [@thornbill] Fix opening links with middle click or open in new tab

- jellyfin/jellyfin-web#2156 [@thornbill] Prevent merge conflicts action from running on forks

- jellyfin/jellyfin-web#2155 [@thornbill] Improve QuickConnect ux

- jellyfin/jellyfin-web#2153 [@thornbill] Add github action to label PRs with merge conflicts

- jellyfin/jellyfin-web#2152 [@thornbill] Add config option to include cookies in playback requests

- jellyfin/jellyfin-web#2150 [@thornbill] Fix plugin initialization for dynamic imports

- jellyfin/jellyfin-web#2149 [@thornbill] Make disabled rules trigger warnings

- jellyfin/jellyfin-web#2148 [@nyanmisaka] Fix the BufferFullError on Chromium based browsers

- jellyfin/jellyfin-web#2147 [@dependabot-preview[bot]] Bump expose-loader from 1.0.1 to 1.0.3

- jellyfin/jellyfin-web#2146 [@dependabot-preview[bot]] Bump @babel/core from 7.12.7 to 7.12.9

- jellyfin/jellyfin-web#2145 [@dependabot-preview[bot]] Bump core-js from 3.7.0 to 3.8.0

- jellyfin/jellyfin-web#2144 [@dependabot-preview[bot]] Bump babel-loader from 8.2.1 to 8.2.2

- jellyfin/jellyfin-web#2143 [@dependabot-preview[bot]] Bump webpack from 5.6.0 to 5.9.0

- jellyfin/jellyfin-web#2142 [@thornbill] Reenable no unresolved import rule and fix playlist imports

- jellyfin/jellyfin-web#2141 [@thornbill] Add api key to remote image urls

- jellyfin/jellyfin-web#2140 [@thornbill] Fix use of global ApiClient in authenticate middleware

- jellyfin/jellyfin-web#2139 [@nielsvanvelzen] Support async plugin loading from window

- jellyfin/jellyfin-web#2138 [@nyanmisaka] Fix the overlap in iOS music view and the hidden nowPlayingBar

- jellyfin/jellyfin-web#2137 [@nielsvanvelzen] Pass plugin name to pluginManager.loadPlugin

- jellyfin/jellyfin-web#2135 [@nyanmisaka] Do not use AC3 for audio transcoding if AAC and MP3 are supported

- jellyfin/jellyfin-web#2131 [@dmitrylyzo] Fix babel support for legacy browsers

- jellyfin/jellyfin-web#2130 [@hawken93] fix login autocomplete

- jellyfin/jellyfin-web#2129 [@dmitrylyzo] Prevent doubleclick on buttons from bubbling to video for fullscreen (alternative)

- jellyfin/jellyfin-web#2128 [@nyanmisaka] Fix the inconsistent header button size in dashboard

- jellyfin/jellyfin-web#2127 [@thornbill] Remove standalone.js and broken navigation

- jellyfin/jellyfin-web#2126 [@thornbill] Fix arabic import from date-fns

- jellyfin/jellyfin-web#2124 [@thornbill] Add stylelint for sass files

- jellyfin/jellyfin-web#2122 [@thornbill] Use static imports for html templates

- jellyfin/jellyfin-web#2121 [@thornbill] Fix fetcher settings html import

- jellyfin/jellyfin-web#2120 [@thornbill] Revert change to base font size

- jellyfin/jellyfin-web#2119 [@nyanmisaka] Show tonemap options for VAAPI

- jellyfin/jellyfin-web#2118 [@hawken93] simplify server address candidates

- jellyfin/jellyfin-web#2117 [@thornbill] Add linters to github actions

- jellyfin/jellyfin-web#2116 [@thornbill] Disable chromecast in unsupported browsers

- jellyfin/jellyfin-web#2111 [@dkanada] fix possible issue with server detection

- jellyfin/jellyfin-web#2110 [@dependabot-preview[bot]] Bump @babel/preset-env from 7.12.1 to 7.12.7

- jellyfin/jellyfin-web#2109 [@dependabot-preview[bot]] Bump stylelint from 13.7.2 to 13.8.0

- jellyfin/jellyfin-web#2108 [@dependabot-preview[bot]] Bump copy-webpack-plugin from 6.3.0 to 6.3.2

- jellyfin/jellyfin-web#2107 [@dependabot-preview[bot]] Bump sass-loader from 10.0.5 to 10.1.0

- jellyfin/jellyfin-web#2106 [@dependabot-preview[bot]] Bump eslint from 7.13.0 to 7.14.0

- jellyfin/jellyfin-web#2105 [@dependabot-preview[bot]] Bump @babel/core from 7.12.3 to 7.12.7

- jellyfin/jellyfin-web#2104 [@thornbill] Remove unused files and dependencies

- jellyfin/jellyfin-web#2103 [@BaronGreenback] [Fix] Removed SeriesInfo attribute.

- jellyfin/jellyfin-web#2101 [@oddstr13] Take baseurl into account, use original url, not LocalAddress

- jellyfin/jellyfin-web#2100 [@BaronGreenback] [RC Fix] Hide some network options until next release

- jellyfin/jellyfin-web#2098 [@thornbill] Fix missing jellyfin-noto resources

- jellyfin/jellyfin-web#2097 [@thornbill] Fix standalone crash due to missing apiclient

- jellyfin/jellyfin-web#2096 [@mario-campos] Implement CodeQL Static Analysis

- jellyfin/jellyfin-web#2095 [@thornbill] Fix epub player issues

- jellyfin/jellyfin-web#2094 [@nyanmisaka] Fix Airplay in Safari

- jellyfin/jellyfin-web#2093 [@thornbill] Fix pdfjs import

- jellyfin/jellyfin-web#2092 [@hawken93] restore Assets

- jellyfin/jellyfin-web#2086 [@thornbill] Fix missing index.html in prod build

- jellyfin/jellyfin-web#2085 [@hawken93] Just return original server address in chromecastHelper

- jellyfin/jellyfin-web#2083 [@BaronGreenback] Multi-repository plugin modification

- jellyfin/jellyfin-web#2080 [@thornbill] Add prepare script to allow CI to skip build

- jellyfin/jellyfin-web#2079 [@joshuaboniface] Revert "pull fonts from official repository"

- jellyfin/jellyfin-web#2078 [@nyanmisaka] Tweak OSD duration display for narrow screen

- jellyfin/jellyfin-web#2077 [@nyanmisaka] Add descriptions for Remux

- jellyfin/jellyfin-web#2076 [@dependabot-preview[bot]] Bump babel-loader from 8.1.0 to 8.2.1

- jellyfin/jellyfin-web#2075 [@dependabot-preview[bot]] Bump webpack-stream from 6.1.0 to 6.1.1

- jellyfin/jellyfin-web#2072 [@dependabot-preview[bot]] Bump gulp-sourcemaps from 2.6.5 to 3.0.0

- jellyfin/jellyfin-web#2071 [@joshuaboniface] Add Debian conffiles with config.json

- jellyfin/jellyfin-web#2069 [@Artiume] Show Remux as a Playback Method

- jellyfin/jellyfin-web#2064 [@nyanmisaka] Add initial profile for HEVC over FMP4-HLS

- jellyfin/jellyfin-web#2061 [@cvium] Remove advanced toggle for library editor

- jellyfin/jellyfin-web#2054 [@dependabot-preview[bot]] Bump webpack from 5.3.2 to 5.4.0

- jellyfin/jellyfin-web#2053 [@dependabot-preview[bot]] Bump core-js from 3.6.5 to 3.7.0

- jellyfin/jellyfin-web#2052 [@dependabot-preview[bot]] Bump eslint from 7.12.1 to 7.13.0

- jellyfin/jellyfin-web#2051 [@dependabot-preview[bot]] Bump query-string from 6.13.6 to 6.13.7

- jellyfin/jellyfin-web#2050 [@dependabot-preview[bot]] Bump whatwg-fetch from 3.4.1 to 3.5.0

- jellyfin/jellyfin-web#2049 [@dependabot-preview[bot]] Bump css-loader from 5.0.0 to 5.0.1

- jellyfin/jellyfin-web#2044 [@neilsb] Fix Schedules Direct Listings

- jellyfin/jellyfin-web#2041 [@Artiume] Sort Show Genres by Random

- jellyfin/jellyfin-web#2040 [@Artiume] Sort Movies Genres by Random

- jellyfin/jellyfin-web#2039 [@ThibaultNocchi] Photos fullscreen button + hiding exit and arrows buttons on autoplay

- jellyfin/jellyfin-web#2038 [@cvium] Create 1 lazyloader observer per collection type

- jellyfin/jellyfin-web#2037 [@dependabot-preview[bot]] Bump node-sass from 4.14.1 to 5.0.0

- jellyfin/jellyfin-web#2036 [@dependabot-preview[bot]] Bump gulp-terser from 1.4.0 to 1.4.1

- jellyfin/jellyfin-web#2035 [@dependabot-preview[bot]] Bump eslint from 7.12.0 to 7.12.1

- jellyfin/jellyfin-web#2034 [@dependabot-preview[bot]] Bump swiper from 6.3.4 to 6.3.5

- jellyfin/jellyfin-web#2032 [@dependabot-preview[bot]] Bump webpack from 5.2.0 to 5.3.2

- jellyfin/jellyfin-web#2031 [@dependabot-preview[bot]] Bump pdfjs-dist from 2.4.456 to 2.5.207

- jellyfin/jellyfin-web#2030 [@dependabot-preview[bot]] Bump file-loader from 6.1.1 to 6.2.0

- jellyfin/jellyfin-web#2029 [@cvium] Fix collectionEditor creation in movies

- jellyfin/jellyfin-web#2028 [@danieladov] Hide progress bar when playing theme media

- jellyfin/jellyfin-web#2027 [@dmitrylyzo] Remove custom hover style

- jellyfin/jellyfin-web#2026 [@nyanmisaka] Adjust the default audio codec to AAC for HLS streaming

- jellyfin/jellyfin-web#2025 [@dmitrylyzo] No external link for TV

- jellyfin/jellyfin-web#2021 [@minobp] Add loading Japanese json files

- jellyfin/jellyfin-web#2018 [@dkanada] Remove broken features from user settings

- jellyfin/jellyfin-web#2017 [@dependabot-preview[bot]] Bump webpack from 5.0.0 to 5.2.0

- jellyfin/jellyfin-web#2016 [@dependabot-preview[bot]] Bump headroom.js from 0.11.0 to 0.12.0

- jellyfin/jellyfin-web#2015 [@dependabot-preview[bot]] Bump hls.js from 0.14.15 to 0.14.16

- jellyfin/jellyfin-web#2014 [@dependabot-preview[bot]] Bump eslint from 7.11.0 to 7.12.0

- jellyfin/jellyfin-web#2013 [@dependabot-preview[bot]] Bump confusing-browser-globals from 1.0.9 to 1.0.10

- jellyfin/jellyfin-web#2012 [@dependabot-preview[bot]] Bump swiper from 6.3.3 to 6.3.4

- jellyfin/jellyfin-web#2011 [@dependabot-preview[bot]] Bump howler from 2.2.0 to 2.2.1

- jellyfin/jellyfin-web#2010 [@dkanada] pull fonts from official repository

- jellyfin/jellyfin-web#2004 [@dependabot-preview[bot]] Bump css-loader from 4.3.0 to 5.0.0

- jellyfin/jellyfin-web#2003 [@dependabot-preview[bot]] Bump @babel/eslint-parser from 7.11.5 to 7.12.1

- jellyfin/jellyfin-web#2002 [@dependabot-preview[bot]] Bump browser-sync from 2.26.12 to 2.26.13

- jellyfin/jellyfin-web#2001 [@dependabot-preview[bot]] Bump @babel/eslint-plugin from 7.11.5 to 7.12.1

- jellyfin/jellyfin-web#2000 [@dependabot-preview[bot]] Bump @babel/preset-env from 7.11.5 to 7.12.1

- jellyfin/jellyfin-web#1999 [@dependabot-preview[bot]] Bump @babel/plugin-proposal-private-methods from 7.10.4 to 7.12.1

- jellyfin/jellyfin-web#1998 [@dependabot-preview[bot]] Bump @babel/core from 7.11.6 to 7.12.3

- jellyfin/jellyfin-web#1997 [@dependabot-preview[bot]] Bump @babel/polyfill from 7.11.5 to 7.12.1

- jellyfin/jellyfin-web#1996 [@dependabot-preview[bot]] Bump @babel/plugin-transform-modules-amd from 7.10.5 to 7.12.1

- jellyfin/jellyfin-web#1995 [@dependabot-preview[bot]] Bump query-string from 6.13.5 to 6.13.6

- jellyfin/jellyfin-web#1994 [@vitorsemeano] Migration to ES6

- jellyfin/jellyfin-web#1993 [@sparky8251] Removed excess quality options to make menu more manageable

- jellyfin/jellyfin-web#1987 [@dependabot-preview[bot]] Bump eslint from 7.10.0 to 7.11.0

- jellyfin/jellyfin-web#1986 [@dependabot-preview[bot]] Bump file-loader from 6.1.0 to 6.1.1

- jellyfin/jellyfin-web#1985 [@dependabot-preview[bot]] Bump style-loader from 1.3.0 to 2.0.0

- jellyfin/jellyfin-web#1984 [@dependabot-preview[bot]] Bump swiper from 6.3.2 to 6.3.3

- jellyfin/jellyfin-web#1982 [@dependabot-preview[bot]] Bump hls.js from 0.14.13 to 0.14.15

- jellyfin/jellyfin-web#1981 [@dependabot-preview[bot]] Bump webpack from 4.44.2 to 5.0.0

- jellyfin/jellyfin-web#1980 [@dkanada] Minor UX improvements

- jellyfin/jellyfin-web#1979 [@BaronGreenback] Dashboard change to Network page to support new NetworkManager

- jellyfin/jellyfin-web#1975 [@dkanada] Manual changes for no-var eslint rule

- jellyfin/jellyfin-web#1974 [@dkanada] Run eslint to fix most var instances

- jellyfin/jellyfin-web#1970 [@dependabot-preview[bot]] Bump style-loader from 1.2.1 to 1.3.0

- jellyfin/jellyfin-web#1968 [@dependabot-preview[bot]] Bump query-string from 6.13.4 to 6.13.5

- jellyfin/jellyfin-web#1966 [@joshuaboniface] Implement frontend component of max user sessions

- jellyfin/jellyfin-web#1963 [@dependabot-preview[bot]] Bump stylelint from 13.7.1 to 13.7.2

- jellyfin/jellyfin-web#1962 [@dependabot-preview[bot]] Bump eslint-plugin-import from 2.22.0 to 2.22.1

- jellyfin/jellyfin-web#1961 [@dependabot-preview[bot]] Bump del from 5.1.0 to 6.0.0

- jellyfin/jellyfin-web#1960 [@dependabot-preview[bot]] Bump hls.js from 0.14.12 to 0.14.13

- jellyfin/jellyfin-web#1959 [@dependabot-preview[bot]] Bump jellyfin-apiclient from 1.4.1 to 1.4.2

- jellyfin/jellyfin-web#1958 [@dependabot-preview[bot]] Bump query-string from 6.13.2 to 6.13.4

- jellyfin/jellyfin-web#1957 [@dependabot-preview[bot]] Bump eslint from 7.9.0 to 7.10.0

- jellyfin/jellyfin-web#1956 [@dependabot-preview[bot]] Bump swiper from 6.2.0 to 6.3.2

- jellyfin/jellyfin-web#1955 [@dmitrylyzo] Fix ES6 import - browser

- jellyfin/jellyfin-web#1954 [@dmitrylyzo] Fix subtitles display on Tizen 2.x

- jellyfin/jellyfin-web#1953 [@dmitrylyzo] Fix 'file:' fetching (bundled apps)

- jellyfin/jellyfin-web#1951 [@dmitrylyzo] Fix theme video (animated backdrops)

- jellyfin/jellyfin-web#1949 [@dmitrylyzo] Retranslate UI instead of recreating it

- jellyfin/jellyfin-web#1948 [@fffrankieh] Fix Enter key in Edit Metadata dialog

- jellyfin/jellyfin-web#1946 [@cvium] Fix PIN request and reset route

- jellyfin/jellyfin-web#1945 [@OancaAndrei] SyncPlay for TV series (and Music)

- jellyfin/jellyfin-web#1942 [@nvllsvm] Optimize images

- jellyfin/jellyfin-web#1941 [@dependabot-preview[bot]] Bump webpack from 4.44.1 to 4.44.2

- jellyfin/jellyfin-web#1940 [@dependabot-preview[bot]] Bump html-webpack-plugin from 4.4.1 to 4.5.0

- jellyfin/jellyfin-web#1938 [@dependabot-preview[bot]] Bump sortablejs from 1.10.2 to 1.12.0

- jellyfin/jellyfin-web#1934 [@cvium] Remove missing ep checkbox in library options

- jellyfin/jellyfin-web#1933 [@nyanmisaka] Update strings for tonemapping on AMD AMF

- jellyfin/jellyfin-web#1929 [@dependabot-preview[bot]] Bump css-loader from 4.2.2 to 4.3.0

- jellyfin/jellyfin-web#1928 [@dependabot-preview[bot]] Bump hls.js from 0.14.11 to 0.14.12